This is part of a series dedicated to TDD and automated unit testing. I will do my best to keep this series alive and updated.

The series parts:

What is TDD and Automated Unit Testing Part 1

What is TDD and Automated Unit Testing Part 2

What is TDD and Automated Unit Testing Part 3

In the first part of this series, we answered questions like:

- Why is testing important?

- How could an experienced coder/developer miss some cases and scenarios and leave them uncovered?

- Why should we automate testing? Isn’t it enough to do it manually?

- Should we implement automated unit testing for the entire system?

- What is TDD?

- What is RGRR or Red Green Red Refactor?

- Why the hassle of doing the last Red?

- What about the way the testing code is written?

- What is AAA?

- What are Testing Frameworks and Test Runners?

Now, the main purpose of this post is to dive into TDD and the world of automated unit testing, and to look at the best practices and new habits you should learn, follow, and embrace to keep up with that world.

Let’s start with an important note: the best practices mentioned in this post are useful not only in the world of TDD and automated unit testing, but also in the daily work of any software developer. So, one of the biggest advantages of learning and practicing TDD and automated testing is picking up good new habits.

The rest of this post discusses those best practices and good habits one by one.

If you are going to write some code to test a method, it would be helpful to make this method as short and focused as possible (aka: Single Responsibility Principle)

When you write code to test a method that implements business logic, keep in mind that the method should be as short and focused as you can make it. This makes the testing code much easier to write. But what does it mean to make a method short and focused?

It means dividing the business logic into smaller, manageable parts and putting each part in its own method. Then you can write testing code for each of these methods separately. It also becomes much easier to compile the list of unit test cases.

I am sure you have heard of the widely known concept of “Divide and Conquer”. You may also have heard of a software development principle called the “Single Responsibility Principle”. This is the principle we have been referring to since the start of this section.

As applied here, it states that every method you write should have only one reason to change. This means that when you write a method, you should keep its logic focused on one simple purpose. That way, when change requests arrive, the changes touch only one, two, or a small number of manageable methods rather than one huge method at the core of the entire system.

Ok, but what about testing? I think it is logical that testing a simple method would be easier than testing a complex method.

For example, assume that your system is a calculator. Which would be easier: testing one method that takes the operation type (add, subtract, multiply, …), the left-hand operand, and the right-hand operand as parameters, or testing a group of methods that each handle one operation?

For the first option, you must write test cases that cover all combinations of these parameters, plus other test cases to make sure the implemented logic doesn’t confuse one operation with another. For example, the square root operation should only consider the left-hand operand.

For the second option, you write simple, well-defined test cases for each method, knowing that there should not be any confusion between operations.

Also, what if a change request comes in and you need to change only one operation? In the first case, handling the change would be painful: you would have to consider every part of the system that calls this method, which could be quite a few, and then review all the test cases you have already written. In the second case, it wouldn’t be that hard.
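To make the comparison concrete, here is a minimal sketch of the two calculator designs. All names in it (“Operation”, “AllInOneCalculator”, “Calculator”) are hypothetical and only serve as an illustration; a real calculator would likely look different.

```csharp
using System;

// Hypothetical operation type used by the "one big method" design.
public enum Operation { Add, Subtract, Multiply, SquareRoot }

// Option 1: a single method handles every operation.
public static class AllInOneCalculator
{
    // All operations share one signature, so "right" is silently ignored
    // for SquareRoot, and every new operation means editing this method.
    public static double Calculate(Operation operation, double left, double right)
    {
        switch (operation)
        {
            case Operation.Add: return left + right;
            case Operation.Subtract: return left - right;
            case Operation.Multiply: return left * right;
            case Operation.SquareRoot: return Math.Sqrt(left);
            default: throw new ArgumentOutOfRangeException(nameof(operation));
        }
    }
}

// Option 2: one small, focused method per operation.
public static class Calculator
{
    public static double Add(double left, double right) { return left + right; }
    public static double Subtract(double left, double right) { return left - right; }
    public static double Multiply(double left, double right) { return left * right; }

    // Takes exactly the one operand it needs, so there is nothing to confuse.
    public static double SquareRoot(double value) { return Math.Sqrt(value); }
}

public static class Program
{
    public static void Main()
    {
        // Each focused method can be checked with its own simple case.
        Console.WriteLine(Calculator.Add(2, 3) == 5);
        Console.WriteLine(Calculator.SquareRoot(9) == 3);
    }
}
```

With the second design, a change to one operation touches one method and the test cases written for that method only.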

What if the method you are trying to test does something like inserting a record in the database?

You are right, a method that does things like this is hard to test, but not always. It depends on how the method is implemented. But first, let me show you the problem with a much simpler example, to show that the cause of the problem is not the need to connect to a database; it goes deeper than that.

Assume that you have a method that just returns a message saying, “Hello system user, the time now is …”. How would you implement this method? Let me answer this question. You may implement it like this:

public string GetWelcomeMessage()

{

return "Hello system user, the time now is " + DateTime.Now.ToString();

}

Now, let’s write a test for this method… Oops, I can’t even come up with a test case, because I don’t have an expected value to compare against at the moment I am writing the test code. Every time the test runs, the expected value would have to change to reflect the date and time at that moment. So, now what?

Someone may have a good suggestion. They may suggest modifying the method as follows:

public string GetWelcomeMessage(DateTime dateAndTime)

{

return "Hello system user, the time now is " + dateAndTime.ToString();

}

Ok, now you can write a working test case as follows:

public void ShouldReturnAWelcomeMessageWithCurrentDateAndTime()

{

DateTime now = DateTime.Now;

Assert.AreEqual("Hello system user, the time now is " + now, GetWelcomeMessage(now));

}

That’s good but it could be better. Keep reading and you will see what I mean.

Dependency Inversion Principle

Dependency Inversion is a principle which states that high-level modules should not depend on low-level modules; both should depend on abstractions rather than concretions. This means that if you have a module (which here could be a method or a class) that depends on another module to carry out its tasks, you should abstract the dependency into a separate layer, and both modules should refer to and depend on that layer rather than on each other. To make this concrete, let’s check an example.

Do you remember the “GetWelcomeMessage” method from the previous section? We will keep working on it to put these ideas into practice.

We have two modules:

- The “GetWelcomeMessage” method

- The “DateTime.Now” property of the “DateTime” class

The first module depends on the second one, because it can’t do its job without using it. This means that we have a dependency here.

To break this dependency, we need to abstract the dependency into a third layer and since this layer is by definition an abstract layer, we can make it an interface.

So, this means that we should have an interface like this:

public interface IDateTimeProvider

{

DateTime Now { get; }

}

That’s good, now, let’s modify the first module so that the dependency on the second module is broken.

public class StandardDateTimeProvider : IDateTimeProvider

{

public DateTime Now

{

get { return DateTime.Now; }

}

}

public string GetWelcomeMessage()

{

return "Hello system user, the time now is "

+ new StandardDateTimeProvider().Now.ToString();

}

This way, the first module no longer reads the second module directly. It now gets the current time through an abstract layer, which can have more than one concrete implementation. The method still creates the “StandardDateTimeProvider” instance itself, though, so the choice of the concrete implementation is the next thing to deal with.

Dependency Injection Technique and Stubs

After applying the dependency inversion principle as we did in the previous section, we can proceed to the next step. Now that we have an abstract layer for the dependency, we can let the “GetWelcomeMessage” method focus on its main task rather than on side tasks. So, what is the main task of the “GetWelcomeMessage” method in its final shape? Its main task is to return a well-formatted welcome message containing a date and time; not one particular date and time, just whatever the “StandardDateTimeProvider” class provides.

But what if I need to swap this “StandardDateTimeProvider” class for another one? Maybe in some other parts of the world the standard DateTime.Now will not return the date I want. Or maybe I need to write some test code and need a fixed value for the date and time. So, what can I do about it?

Here comes the dependency injection technique. You can modify the “GetWelcomeMessage” method so that it takes an instance of any class that implements the “IDateTimeProvider” interface, and uses that instance rather than the hardcoded “StandardDateTimeProvider” instance. It would look like this:

public string GetWelcomeMessage(IDateTimeProvider dateTimeProvider)

{

return "Hello system user, the time now is " + dateTimeProvider.Now.ToString();

}

This way, at runtime we can inject an instance of any class that implements the “IDateTimeProvider” interface, and the decision about which class to use can be based on any condition or purpose we have.

For example, to test the final “GetWelcomeMessage” method, we can just do as follows:

public class DummyDateTimeProviderForTesting : IDateTimeProvider

{

public DateTime Now

{

get { return DateTime.Parse("2018/01/27 10:50:00"); }

}

}

public void ShouldReturnAWelcomeMessageWithCurrentDateAndTime()

{

//the method now takes the provider itself, not a DateTime value

IDateTimeProvider provider = new DummyDateTimeProviderForTesting();

Assert.AreEqual("Hello system user, the time now is "

+ provider.Now, GetWelcomeMessage(provider));

}

This is great, right? Defining the new “DummyDateTimeProviderForTesting” class and using it for testing is what we call using a stub. The stub here is the “DummyDateTimeProviderForTesting” class: a fake implementation of a dependency of the method you are testing, provided so that you get predictable results that you fully control.

Let’s make it harder and see if the same concepts hold up.

Back to the database example: what if the method you are trying to test does something like inserting a record in the database?
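As a self-contained sketch of the stub idea, the example below hands the fixed date and time to the stub through its constructor, so each test can pick its own value. The “FixedDateTimeProvider” and “WelcomeMessages” names are hypothetical, and the explicit format string with the invariant culture is an assumption added here so that the result does not depend on the machine’s regional settings.

```csharp
using System;
using System.Globalization;

public interface IDateTimeProvider
{
    DateTime Now { get; }
}

// Hypothetical stub: always returns the instant it was constructed with.
public class FixedDateTimeProvider : IDateTimeProvider
{
    private readonly DateTime fixedNow;

    public FixedDateTimeProvider(DateTime fixedNow)
    {
        this.fixedNow = fixedNow;
    }

    public DateTime Now
    {
        get { return fixedNow; }
    }
}

public static class WelcomeMessages
{
    public static string GetWelcomeMessage(IDateTimeProvider dateTimeProvider)
    {
        // Assumption: a fixed format and culture, to keep the text predictable.
        return "Hello system user, the time now is "
            + dateTimeProvider.Now.ToString("yyyy-MM-dd HH:mm:ss", CultureInfo.InvariantCulture);
    }
}

public static class Program
{
    public static void Main()
    {
        // Arrange: the test fully controls what "now" means.
        IDateTimeProvider stub = new FixedDateTimeProvider(new DateTime(2018, 1, 27, 10, 50, 0));

        // Act
        string message = WelcomeMessages.GetWelcomeMessage(stub);

        // Assert: the expected text can be written down in advance.
        Console.WriteLine(message == "Hello system user, the time now is 2018-01-27 10:50:00");
    }
}
```

Because the expected message no longer depends on when the test runs, the comparison gives the same result on every run.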

Assume that the module you are trying to test is as follows:

public class EmployeeRepository

{

public int CreateEmployee(Employee employee)

{

//do some serious stuff and add the employee to the database and return its id

}

public Employee GetEmployeeById(int id)

{

//do some serious stuff and retrieve the employee from the database

}

}

public class EmployeesManager

{

public Employee RegisterEmployeeToSystem(Employee employee)

{

EmployeeRepository employeeRepository = new EmployeeRepository();

return employeeRepository.GetEmployeeById(

employeeRepository.CreateEmployee(employee));

}

}

Now, you need to write some test code to test the “RegisterEmployeeToSystem” method. So, following the same principles and guidelines:

public interface IEmployeeRepository

{

int CreateEmployee(Employee employee);

Employee GetEmployeeById(int id);

}

public class EmployeeRepository : IEmployeeRepository

{

public int CreateEmployee(Employee employee)

{

//do some serious stuff and add the employee to the database and return its id

}

public Employee GetEmployeeById(int id)

{

//do some serious stuff and retrieve the employee from the database

}

}

public class EmployeesManager

{

private IEmployeeRepository employeeRepository;

//the repository is injected, so a test can pass in a fake one

public EmployeesManager(IEmployeeRepository employeeRepository)

{

this.employeeRepository = employeeRepository;

}

public Employee RegisterEmployeeToSystem(Employee employee)

{

return employeeRepository.GetEmployeeById(

employeeRepository.CreateEmployee(employee));

}

}

//needs System.Collections.Generic (List<T>) and System.Linq (First)

public class DummyEmployeeRepositoryForTesting : IEmployeeRepository

{

private List<Employee> employees = new List<Employee>();

private int counter = 0;

public int CreateEmployee(Employee employee)

{

counter++;

Employee clone = Clone(employee);

clone.Id = counter;

employees.Add(clone);

//return the id assigned to the stored copy

return counter;

}

public Employee GetEmployeeById(int id)

{

return employees.First(emp => emp.Id == id);

}

private Employee Clone(Employee employee)

{

//return a copy of the input employee (assumes Employee only has Id, Name and Age)

return new Employee() { Id = employee.Id, Name = employee.Name, Age = employee.Age };

}

}

public void ShouldAddAnEmployeeAndRetrieveItSuccessfully()

{

//Arrange

DummyEmployeeRepositoryForTesting dummyRepo = new DummyEmployeeRepositoryForTesting();

EmployeesManager employeesManager = new EmployeesManager(dummyRepo);

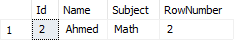

Employee employeeToAdd1 = new Employee() { Name = "Ahmed", Age = 33 };

Employee employeeToAdd2 = new Employee() { Name = "Mohamed", Age = 25 };

//Act

Employee employeeRetrieved1 = employeesManager.RegisterEmployeeToSystem(employeeToAdd1);

Employee employeeRetrieved2 = employeesManager.RegisterEmployeeToSystem(employeeToAdd2);

//Assert

Assert.IsTrue(employeeRetrieved1 != null);

Assert.AreEqual(1, employeeRetrieved1.Id);

Assert.AreEqual(employeeToAdd1.Name, employeeRetrieved1.Name);

Assert.AreEqual(employeeToAdd1.Age, employeeRetrieved1.Age);

Assert.IsTrue(employeeRetrieved2 != null);

Assert.AreEqual(2, employeeRetrieved2.Id);

Assert.AreEqual(employeeToAdd2.Name, employeeRetrieved2.Name);

Assert.AreEqual(employeeToAdd2.Age, employeeRetrieved2.Age);

}

Ok, I believe this was a good example to demonstrate the power of these concepts and best practices. But before leaving for today, I should tell you that there is more to come.

For example, using stubs is not the only way to achieve what we have done so far. There is something else called “Mocks” that is very powerful and that you should know about, which is why there will be a section on “Mocks” in the next part of the series.

That’s it for today. See you in the next part.

Note:

All code samples included in this post were written in a text editor, not an IDE, so be a good sport and don’t judge too harshly if you find any mistakes 😊